Abstract

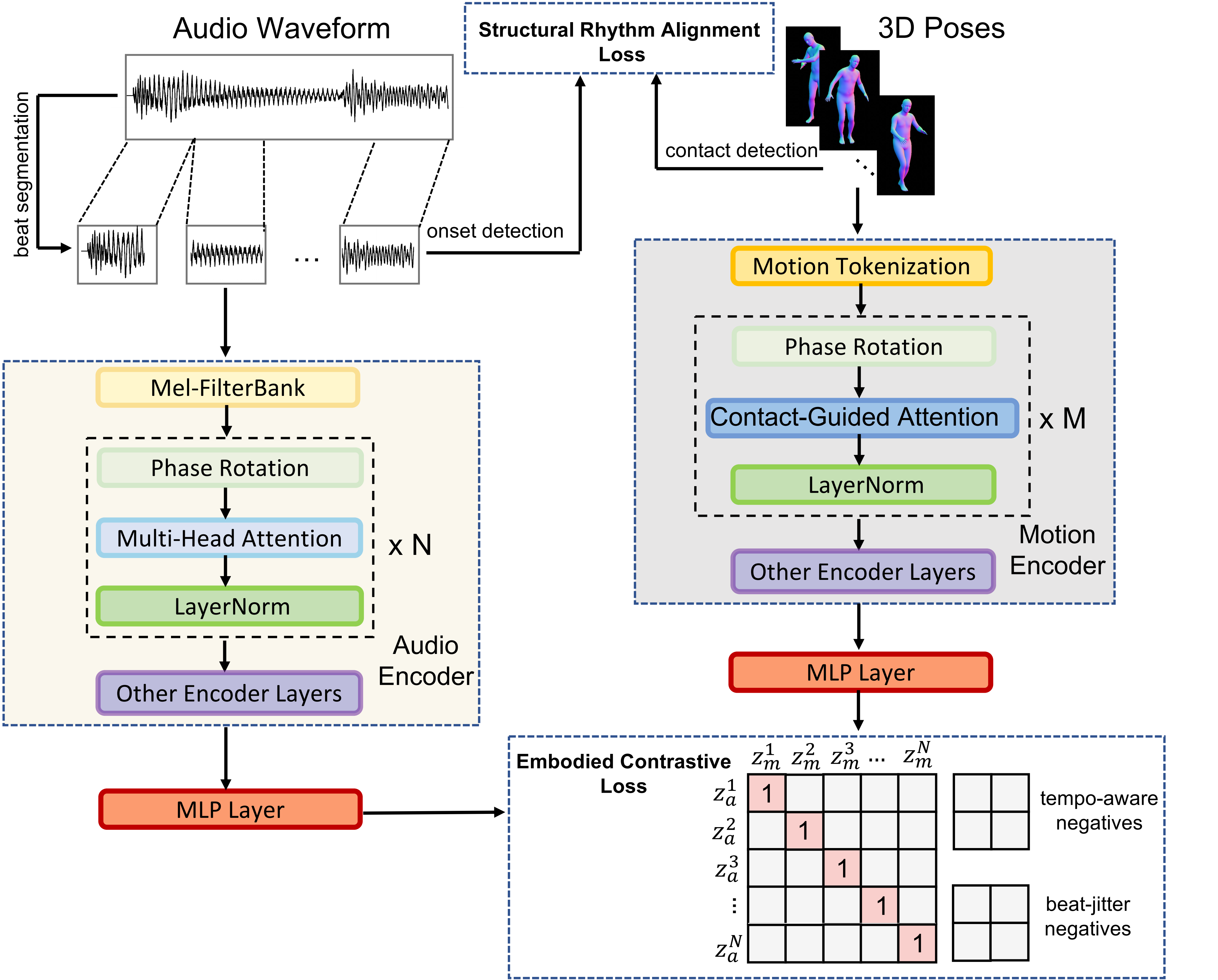

Music is both an auditory and an embodied phenomenon, closely linked to human motion and naturally expressed through dance. However, most existing audio representations neglect this embodied dimension, which limits their ability to capture the rhythmic and structural cues that drive movement. We propose MotionBeat, a framework for motion-aligned music representation learning. MotionBeat is trained with two newly proposed objectives: the Embodied Contrastive Loss (ECL), an enhanced InfoNCE formulation with tempo-aware and beat-jitter negatives that sharpens fine-grained rhythmic discrimination, and the Structural Rhythm Alignment Loss (SRAL), which enforces rhythmic consistency by aligning musical accents with the corresponding motion events. Architecturally, MotionBeat introduces bar-equivariant phase rotations to capture cyclic rhythmic patterns and contact-guided attention to emphasize motion events synchronized with musical accents. Experiments show that MotionBeat outperforms state-of-the-art audio encoders in music-to-dance generation and transfers effectively to beat tracking, music tagging, genre and instrument classification, emotion recognition, and audio–visual retrieval.
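The abstract characterizes ECL only as an enhanced InfoNCE with tempo-aware and beat-jitter negatives. A minimal NumPy sketch of that idea, assuming motion embeddings serve as anchors, the paired audio embeddings as positives, other in-batch audio clips as ordinary negatives, and embeddings of tempo-altered and beat-jittered copies of each clip as extra per-sample hard negatives. The function names, tensor shapes, and temperature value are hypothetical and not taken from the paper.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-8):
    """Scale vectors to unit length so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def embodied_contrastive_loss(motion, audio, tempo_neg, jitter_neg, temperature=0.07):
    """Hypothetical ECL sketch: InfoNCE with extra timing-based hard negatives.

    motion:     (B, D) motion embeddings, used as anchors
    audio:      (B, D) paired audio embeddings; row i is the positive for motion i
    tempo_neg:  (B, K, D) embeddings of tempo-altered copies of each audio clip
    jitter_neg: (B, K, D) embeddings of beat-jittered copies of each audio clip
    """
    m = l2_normalize(motion)
    a = l2_normalize(audio)
    hard = l2_normalize(np.concatenate([tempo_neg, jitter_neg], axis=1))  # (B, 2K, D)

    batch_logits = m @ a.T                          # (B, B): positives on the diagonal
    hard_logits = np.einsum("bd,bkd->bk", m, hard)  # (B, 2K): each anchor vs. its own hard negatives
    logits = np.concatenate([batch_logits, hard_logits], axis=1) / temperature

    # Numerically stable log-softmax over all candidates in each row.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))

    # The positive for anchor i sits in column i.
    idx = np.arange(m.shape[0])
    return float(-log_prob[idx, idx].mean())


# Usage with random embeddings (batch of 8, two hard negatives of each kind).
rng = np.random.default_rng(0)
B, K, D = 8, 2, 16
loss = embodied_contrastive_loss(
    rng.normal(size=(B, D)), rng.normal(size=(B, D)),
    rng.normal(size=(B, K, D)), rng.normal(size=(B, K, D)),
)
```

Because the hard negatives share a clip's content and differ only in timing, they push the encoder to separate embeddings by tempo and beat placement. If a tempo negative were indistinguishable from the positive, the positive could capture at most half of the softmax mass, so the loss could not fall below roughly log 2.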

Method

Architecture of MotionBeat. Audio and motion inputs are converted into beat-synchronous tokens and processed by encoders that apply bar-equivariant phase rotations and contact-guided attention. The embeddings are trained with the Embodied Contrastive Loss (ECL), while auxiliary rhythm heads produce onset envelopes and contact pulses for the Structural Rhythm Alignment Loss (SRAL). The total loss combines both objectives to learn motion-aligned music representations.
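The description does not specify how SRAL scores alignment. One plausible reading, sketched here in NumPy, compares the onset envelope and the contact-pulse sequence on a shared frame grid with a Pearson correlation, keeps the best value within a small lag tolerance, and uses one minus that value as the loss; the total objective is then a weighted sum of ECL and SRAL. The function names, `max_lag`, and the weight `lam` are assumptions for illustration, not the paper's definitions.

```python
import numpy as np


def _pearson(x, y, eps=1e-8):
    """Pearson correlation; returns ~0 instead of NaN if a segment is constant."""
    zx = (x - x.mean()) / (x.std() + eps)
    zy = (y - y.mean()) / (y.std() + eps)
    return float(np.mean(zx * zy))


def structural_rhythm_alignment_loss(onset_env, contact_pulse, max_lag=2):
    """Hypothetical SRAL sketch: 1 - best correlation within +/- max_lag frames.

    onset_env:     (T,) onset envelope from the audio rhythm head
    contact_pulse: (T,) contact pulses from the motion rhythm head, same frame grid
    """
    a = np.asarray(onset_env, dtype=float)
    c = np.asarray(contact_pulse, dtype=float)
    T = len(a)
    best = -1.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            x, y = a[lag:], c[:T - lag]    # onset lags contact by `lag` frames
        else:
            x, y = a[:T + lag], c[-lag:]   # contact lags onset by `-lag` frames
        best = max(best, _pearson(x, y))
    return 1.0 - best


def total_loss(ecl, sral, lam=0.5):
    """Weighted sum of the two objectives; `lam` is an assumed weight."""
    return ecl + lam * sral


# Usage on a toy accent pattern: one accent every 8 frames, contacts one frame late.
t = np.arange(64)
onset = (t % 8 == 0).astype(float)
contact = np.roll(onset, 1)
loss = total_loss(ecl=0.9, sral=structural_rhythm_alignment_loss(onset, contact))
```

The lag tolerance lets a foot contact that lands a frame or two off the accent still count as aligned, while contacts placed halfway between accents yield a negative correlation and therefore a loss above 1.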